Arweave: a Potential Defense Against Deepfakes?

One particular issue has all the ingredients to become one of the first successful intersections of blockchain technology, real-world necessity, and hardware implementation, one that could end a problem before it turns into a full-blown crisis. I’m talking about deepfake technology and the imminent need to counter it in order to separate truth from fiction in the coming years.

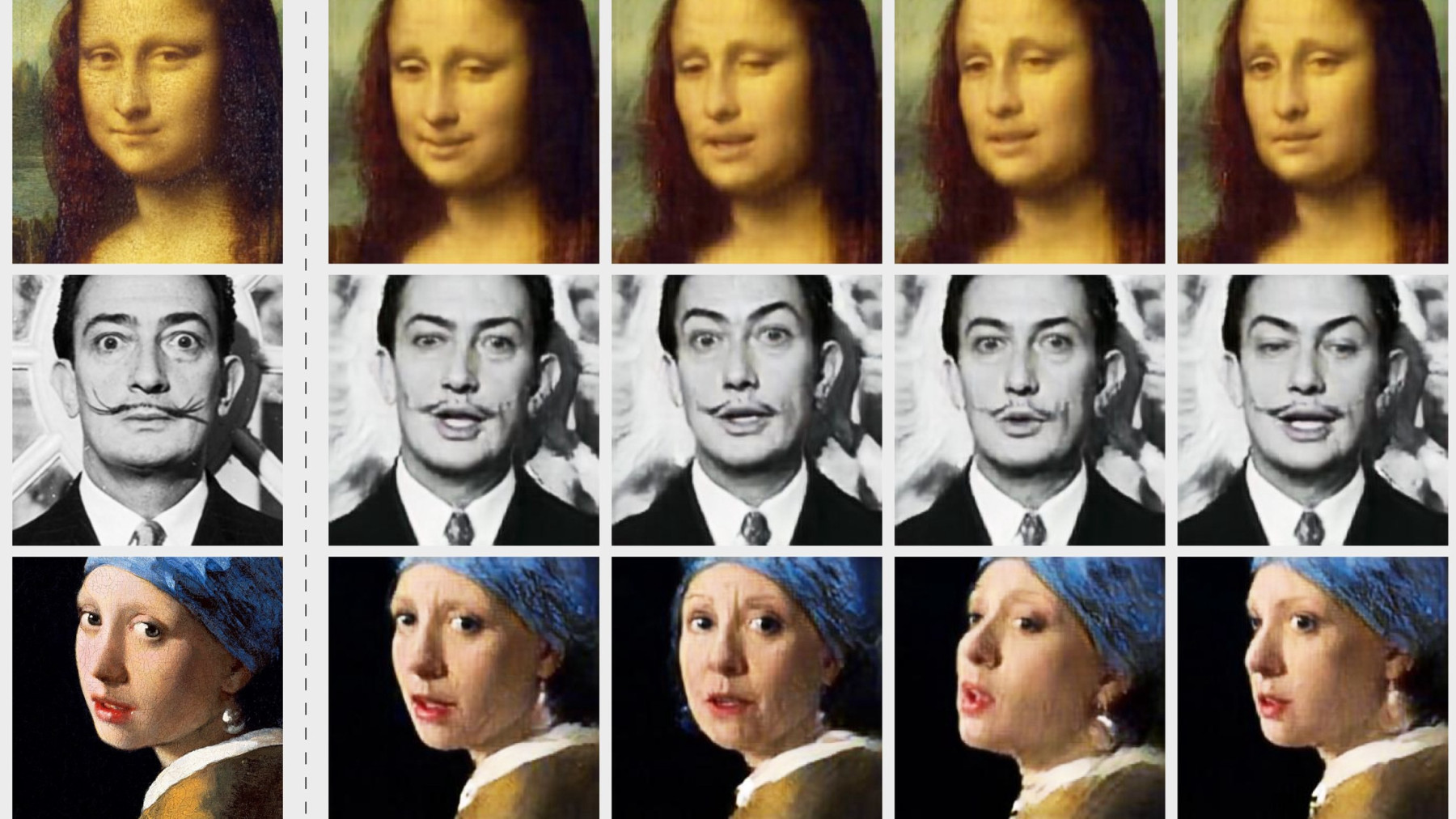

Deepfake technology is not malicious on its own. Like almost any kind of tech, it’s a double-edged sword; whether its outcome is good or evil depends only on its users’ intent. Deepfakes are a form of synthetic media that leverages AI to create the illusion of genuine footage depicting a real person.

There are many legitimate use cases for this technology, and the research will not come to a halt because of the ethical concerns it raises. The movie industry will continue to improve the tech further to “rejuvenate” actors in certain scenes or fully “resurrect” long-dead movie stars or historical personalities. Our beloved metaverse will rely heavily on AI, pretty much in the same manner as deepfakes do now. No matter how they look, our avatars will copy and/or enhance our way of talking, facial expressions, and mannerisms.

The pandemic was only a catalyst that accelerated the pace at which the tech evolved. Have you heard about NVIDIA’s Maxine? Since 5G is not yet a thing, they built an SDK capable of carrying out real-time augmented reality tasks, and in the process, they shrank the bandwidth needed for an enhanced AR video conference. Instead of sending a stream of images bloated with data that would demand high bandwidth, the AI behind Maxine only sends the location of some facial features, like a few points near the mouth, eyes, and nose.

Those points are then constructed into moving images on the receiver’s machine or in the cloud. I used “constructed” and not “reconstructed” on purpose. Theoretically, this tech could reconstruct the message sender’s original face. However, the final construct could just as well render a look different from the original sender’s and still carry the message.

It’s just a matter of time until we can cheaply and quickly render images, videos, and even live feeds, with anybody’s face and voice, that no one can flag as “fake”. This is now a certainty, not just a possibility.

The ugly side

It is pointless to repeat what other articles have already said about the threat this tech carries. Even in its infancy, it has proved to be a worthy adversary of the truth. You can read the Wikipedia page if you need a little intro to its destructive potential.

What is worth noting is that, regardless of how it manifests (fake porn clips, financial fraud, political propaganda, etc.), it could undermine the entire web of trust that keeps our societies, communities, and even families together. Imagine a world in which no one can tell the truth from a lie.

When deepfakes become impossible to distinguish from genuine clips, there will be a generalized state of plausible deniability: “It’s not me in that footage; it’s a deepfake; prove me wrong!” It will be the peak of skepticism about everything. If you believe that conspiracy theories thrive nowadays, think about how they will spread then.

Arweave as a solution

For years, blockchain has been seen as a possible defense against deepfakes. The idea of pairing an open distributed ledger with unique file hashes keeps recurring. Unfortunately, web2 being web2, an alternative solution was preferred: counter AI with AI. The idea was to teach AI to tell originals from fakes. The problem is that this anti-deepfake AI can be easily defeated, even if the attacker only partially knows how the AI was trained.

Our work shows that attacks on deepfake detectors could be a real-world threat.

More alarmingly, we demonstrate that it’s possible to craft robust adversarial deepfakes even when an adversary may not be aware of the inner-workings of the machine learning model used by the detector.

Given that this research was published in 2021, 2022 may be the year when blockchain gets a chance to prove what it can do in this matter.

The immutable nature of blockchain, combined with its public character, guarantees that nothing written on it can be tampered with. What Arweave brings to the equation is that you can now store entire files inside a blockchain-like structure. This removes one potential point of failure: in the first proposals that envisaged blockchain as a solution to deepfakes, only the hash of a file would have been uploaded (due to the obvious storage limitations of traditional blockchains).

I referred to the notion of “hashes” a few times, so, for clarity, a definition would be nice:

A cryptographic hash function (CHF) is a mathematical algorithm that maps data of an arbitrary size (often called the “message”) to a bit array of a fixed size (the “hash value”, “hash”, or “message digest”).

This means that no matter the length of a file, you can always have an almost unique identifier of a fixed size. (For the sake of mathematics, one cannot state that hashes are unique because collisions can happen; a collision means that an algorithm generates the same hash for different files. Still, the probability of an accidental collision is ridiculously low, even for an older algorithm like SHA-1, although deliberately crafted SHA-1 collisions have been demonstrated in practice.)

In theory, one stores the hash of a file on-chain, and when another party wants to check the provenance of that file, it can rerun the hashing algorithm, obtain a hash, and then check the blockchain for that particular hash.
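The check described above can be sketched in a few lines of Python. Treat this as an illustration under stated assumptions, not as how any real chain works: the `ledger` dictionary is a hypothetical stand-in for an on-chain registry, `register` and `check_provenance` are made-up names, and SHA-256 is picked here only as an example of a hash function.

```python
import hashlib
from typing import Dict, Optional

# Hypothetical stand-in for an on-chain registry: digest -> registration note.
ledger: Dict[str, str] = {}


def file_hash(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()


def register(data: bytes, note: str) -> str:
    """Record the digest of a file; an existing record is never overwritten."""
    digest = file_hash(data)
    ledger.setdefault(digest, note)
    return digest


def check_provenance(data: bytes) -> Optional[str]:
    """Rerun the hash and look it up; None means the file was never registered."""
    return ledger.get(file_hash(data))


# Usage: the author registers the original, a third party checks a copy.
original = b"bytes of the original photo"
register(original, "registered by the author")
print(check_provenance(original))             # the registration note
print(check_provenance(b"some other file"))   # None
```

Note that the lookup only succeeds for a byte-for-byte identical copy.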

Now, from my point of view, there is no problem with hashes, but when you try to use them in a web2 ecosystem they could become useless.

Let’s say that you take a picture, obtain its hash, then upload the photo to Facebook, and then delete the photo from your device. Poof, the hash will probably be useless. When you upload the picture to Facebook, even if it looks the same, it would probably be compressed or reencoded.

This will happen anywhere on the web; each platform has its methods to save space and deliver a standardized look and user experience across a wide range of devices. This practice is not bad on its own, but in our case, if even one bit of data is modified, the resulting hash will be different from the original, and the provenance will be lost.
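To make the “one bit” point concrete, here is a small Python sketch. The byte string is just a stand-in for a photo, `flip_bit` and `differing_bits` are helper names invented for this example, and SHA-256 is an assumed choice of hash function.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of the data with exactly one bit inverted."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


def differing_bits(hex_a: str, hex_b: str) -> int:
    """Count the bit positions in which two hex digests differ."""
    return bin(int(hex_a, 16) ^ int(hex_b, 16)).count("1")


original = b"stand-in for the bytes of a photo" * 100
altered = flip_bit(original, 0)  # same length, one bit changed

h1 = sha256_hex(original)
h2 = sha256_hex(altered)
print(h1)
print(h2)
print(differing_bits(h1, h2), "of 256 digest bits differ")
```

The two digests have nothing visibly in common, so a lookup by hash cannot tell “slightly recompressed” from “completely different file”.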

With Arweave, we don’t need to rely on hashes alone. We can upload the entire file directly. Even if a copy is re-encoded to another format, slightly edited, or compressed, we will always have the original file to compare it with.

OK, so let’s check again what Arweave alone has to offer in the pursuit of countering deepfakes:

- Like on any blockchain, the uploaded content is immutable and public for potential scrutiny. This guarantees that the data has been unchanged since the moment of upload.

- Removes the potential point of failure in the sole use of hashes by uploading the entire file (with a bunch of metadata).

- Individuals could quickly review the content on Arweave in a more “human” way (many people would not simply trust a hash, but with the use of a gateway, anybody could follow the link to the original footage and compare it to a copy with the naked eye).

Obviously, there is still a missing link in a fully deepfake-proof circuit. The time between a file’s creation and its upload to Arweave is the perfect window to tamper with it. To create a perfect flow, the file should go straight to Arweave, and only after the upload completes should it become accessible locally.

And now I’m entering “what if” territory.

This could be achieved only by onboarding hardware manufacturers. Imagine encapsulated cameras and microphones that have the sensors directly linked to a chip and a 4G/5G antenna. For years smartphones have carried security chips that protect sensitive data and are completely separated from the rest of the device.

Manufacturers only need to add one extra security chip, with a few GB of storage and a simple piece of software installed on it: the raw images captured are stored in a read-only state until they are uploaded on the chain. When the upload confirmation comes, the data is erased from the security chip and delivered to the device’s primary storage, where it can be altered in any way the user wants. Full circle.
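Since this is “what if” territory anyway, the flow of such a chip can be modeled as a tiny state machine. Everything in this Python sketch is hypothetical: `SecureCaptureBuffer`, its methods, and the `upload` callback (which stands in for “send to the chain and wait for confirmation”) are invented for illustration, and a software class obviously gives none of the guarantees a sealed hardware chip would.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class SecureCaptureBuffer:
    """Toy model of the hypothetical security chip's capture buffer."""

    # Hypothetical uploader: returns a receipt id, or None if not confirmed.
    upload: Callable[[bytes], Optional[str]]
    _pending: Dict[str, bytes] = field(default_factory=dict)

    def capture(self, raw: bytes) -> str:
        """Seal a raw capture in the buffer and return its id (its hash)."""
        capture_id = hashlib.sha256(raw).hexdigest()
        self._pending[capture_id] = bytes(raw)  # immutable copy
        return capture_id

    def release(self, capture_id: str) -> Optional[bytes]:
        """Upload the capture; hand it over only once the upload is confirmed."""
        raw = self._pending[capture_id]
        receipt = self.upload(raw)
        if receipt is None:
            return None  # no confirmation: the data stays sealed
        del self._pending[capture_id]  # confirmed: erase from the buffer
        return raw  # deliver to primary storage, where it may be edited

    def pending_count(self) -> int:
        """Number of captures still waiting for an upload confirmation."""
        return len(self._pending)
```

The only path from the buffer to editable storage goes through `release`, and `release` returns data only after the uploader reports success.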

Now, let’s focus on this particular chip and its other potential uses:

a. Evidently, it has to act like a wallet too. With a bit of tinkering, this chip could become a built-in hardware wallet, the standard for a myriad of mobile devices.

b. So we have a dedicated secure chip, with some processing power and some storage available. Most of the time, both the processing power and the storage will sit unused. What if we could rent them out? Does it sound familiar? (Imagine blockchain further decentralized at the level of mobile devices, a swarm of tiny nodes, not very persistent on their own, but multiplied by the billions… I don’t know why, but I feel that the guys from Koii Network will have something to say about this vision.)

Potential implications

I believe that the true blockchain revolution will come when hardware manufacturers and data providers hop in and ease the way for the end user. Web3 will become a thing when users do not know that they are inside web3: when the payment for permanent storage is included in the data provider’s subscription, when they manage their crypto assets without knowing they are inside an encrypted wallet, when they earn money just for having their phone turned on.

Back to our case: realistically, this fight against deepfakes should start with media content providers. Do you want to be a reputable news agency that streams video feeds? Your devices should be “truth proof”-ready. By the way, it will be interesting to follow the practice of media in general. Given that they will upload the raw footage, everybody will be able to see how TV stations edit their content. Are they choosing only the parts that suit their narrative? How impartial are they?

The next step, the normalization and widespread adoption of this tech, is more troublesome. Since every upload to Arweave carries a cost, only those who can afford it might have the “right to be believed”. This problem can be overcome by providing an “entrance” layer to the permaweb: a free, mempool-like space, immutable but not permanent, from which other peers could pay to upload certain content based on different criteria.

Let’s say, for example, that a kid from a third-world country films a human rights abuse that will make the front pages of all the big news networks. Even before it is uploaded to Arweave, the footage has all the characteristics needed to prove it authentic. One of the media networks will pay to add it to its permanent archive.
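A rough Python sketch of how such an entrance layer could behave follows. Nothing here exists on Arweave as far as this article is concerned: `StagingPool`, its time-to-live, and the `sponsor` step are hypothetical names and rules chosen only to illustrate “immutable but not permanent”, and the `permanent` dictionary is a stand-in for permanent storage.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class StagedItem:
    data: bytes
    staged_at: float  # timestamp in seconds, supplied by the caller


class StagingPool:
    """Toy model of a free, temporary entrance layer (all names hypothetical)."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl_seconds = ttl_seconds
        self._items: Dict[str, StagedItem] = {}
        self.permanent: Dict[str, bytes] = {}  # stand-in for permanent storage

    def stage(self, data: bytes, now: float) -> str:
        """Add content for free; its id is its hash, so it cannot be swapped."""
        item_id = hashlib.sha256(data).hexdigest()
        self._items.setdefault(item_id, StagedItem(data, now))
        return item_id

    def expire(self, now: float) -> None:
        """Drop every staged item older than the time-to-live."""
        self._items = {
            item_id: item
            for item_id, item in self._items.items()
            if now - item.staged_at < self.ttl_seconds
        }

    def sponsor(self, item_id: str, now: float) -> bool:
        """A peer pays to make an item permanent, if it is still staged."""
        self.expire(now)
        item = self._items.pop(item_id, None)
        if item is None:
            return False
        self.permanent[item_id] = item.data
        return True
```

Content nobody sponsors simply ages out, while sponsored content moves to permanent storage unchanged.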

On top of that, we need to develop a specific way of relating to the web, similar to IRL. Not everything is serious or worthy of being deemed as serious. We don’t have to provide proof of authenticity of every file. Sorting the content we produce will make us more aware of our “web footprint,” and we will save a lot in the process.